October 21, 2024

FIMI-ISAC Collective Findings Report on 2024 European Elections

The Foreign Information Manipulation and Interference Information Sharing and Analysis Center (FIMI-ISAC), of which GDI is a member, has published a report compiling FIMI attacks observed during the first half of 2024. The case studies include campaigns (ab)using artificial intelligence tools to produce content, campaigns mimicking legitimate news sources, and disinformation campaigns targeting immigrants and exploiting gender and public health issues.

In 2024, nearly half of the world's population will vote. The choices made and the representatives elected will shape global governance for decades. Foreign Information Manipulation and Interference campaigns can significantly threaten election integrity, with actors seeking to manipulate public opinion, influence voter behaviour, and undermine trust in electoral processes. FIMI-ISAC, a network of organisations that protect democratic societies and institutions, has published a report on the Foreign Information Manipulation and Interference attacks uncovered during the first half of 2024, focusing on the June 2024 European Parliament elections. The full report covers cases such as "Doppelganger" and "Operation Overload", identifies the narratives and the tactics, techniques, and procedures (TTPs) used, and is available on the FIMI-ISAC website. Below, we summarise some of its core takeaways.

KEY FINDINGS

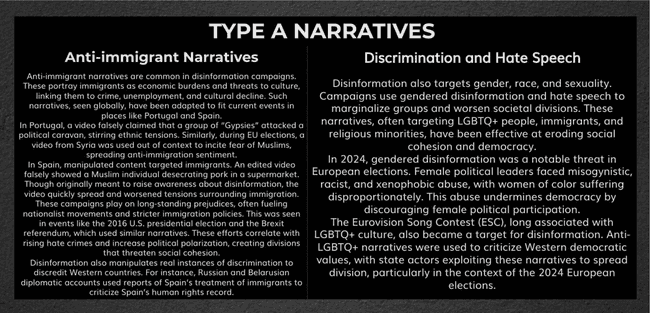

Analysis of the case studies uncovered by FIMI-ISAC member organisations reveals two types of disinformation narratives: Type A, which exploits societal weaknesses to create division, and Type B, which erodes trust in democratic institutions. Together, these narratives manipulate public opinion and undermine democratic governance.

TYPE A NARRATIVES focus on societal vulnerabilities. They argue that outsiders and those different from the majority are threats to societal norms, using fear and prejudice to spread xenophobia, racism, and discrimination. These narratives have historically been used to create internal conflicts and distrust, especially in state-sponsored disinformation campaigns, such as Russia’s efforts to destabilize Western societies.

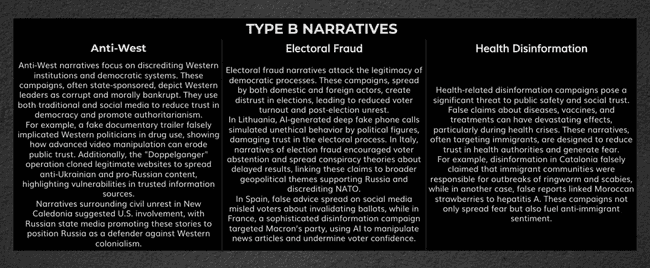

TYPE B NARRATIVES aim to break down trust in institutions. They attack electoral processes, government agencies, and public health systems, encouraging disengagement. This distrust creates fertile ground for disinformation to spread.

The two narrative types work together in disinformation campaigns, criticising Western countries for embracing non-traditional cultures and portraying marginalised groups as threats to traditional values. These tactics, seen in Russian disinformation strategies, have been used to discredit Western models of governance and to promote authoritarian systems as superior.

For example, Russia’s disinformation tactics during the 2016 U.S. elections and various European elections were aimed at delegitimizing democratic processes. Health-related disinformation, such as linking immigrants to public health risks, further erodes trust in public institutions.

Understanding how these narratives interact, and how they have been used historically, can inform the development of countermeasures that protect democratic institutions and societal cohesion.

Tactics, Techniques, and Procedures (DISARM TTPs)

The tactics, techniques, and procedures (TTPs) used in the analysed case studies can be broadly grouped into content production, establishment of legitimacy, and content dissemination; further categories are described in the full report.

Content production included the use of artificial intelligence to create content, manipulation and editing of material, and decontextualization. To establish legitimacy, actors created inauthentic websites and impersonated legitimate entities. The content was then disseminated through social media amplification, among other methods, with trolls and bots amplifying and manipulating it, and by flooding the information space through existing networks of inauthentic news sites. Understanding these TTPs can help counter disinformation campaigns and their impacts.

CONCLUSION

The analysis of foreign information manipulation and interference (FIMI) case studies during the 2024 European elections reveals the persistent use of divisive narratives, such as anti-immigrant sentiment, distrust in institutions, and health disinformation. Disinformation actors employed advanced tactics such as AI-generated content, manipulated images, and deepfake technology, but many of the techniques are well established and are reused across different platforms and countries.

FIMI-ISAC warns that despite the European Commission's guidelines under the Digital Services Act (DSA) for mitigating disinformation risks, the continued spread of false narratives highlights the insufficiency of voluntary measures. This points to the urgent need for a whole-of-society approach, involving governments, platforms, and civil society.

Key recommendations include dramatically increasing public awareness, investing in research and data collection tools, improving platform design, and developing alert systems to detect disinformation early. The persistent reappearance of the same tactics underscores vulnerabilities in current defences and calls for a comprehensive re-evaluation of strategies to protect democratic processes and information integrity.

Download the report to read the full collective findings.